TL;DR: We propose MM-Diff, a unified and tuning-free image personalization framework capable of generating high-fidelity images of both single and multiple subjects in seconds.

Multi-Domain · Tuning-Free · Multi-Subject

Recent advances in tuning-free personalized image generation based on diffusion models are impressive. However, to improve subject fidelity, existing methods either retrain the diffusion model or infuse it with dense visual embeddings, both of which suffer from poor generalization and efficiency. Moreover, these methods falter in multi-subject image generation because the cross-attention mechanism is unconstrained. In this paper, we propose MM-Diff, a unified and tuning-free image personalization framework capable of generating high-fidelity images of both single and multiple subjects in seconds. Specifically, to simultaneously enhance text consistency and subject fidelity, MM-Diff employs a vision encoder to transform the input image into CLS and patch embeddings. The CLS embeddings serve two purposes: they augment the text embeddings, and, together with the patch embeddings, they are used to derive a small number of detail-rich subject embeddings. Both the vision-augmented text embeddings and the subject embeddings are efficiently integrated into the diffusion model through a purpose-built multimodal cross-attention mechanism. Additionally, MM-Diff introduces cross-attention map constraints during the training phase, which enable flexible multi-subject image sampling during inference without any predefined inputs (e.g., layout). Extensive experiments demonstrate the superior performance of MM-Diff over other leading methods.
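To make the idea of a cross-attention map constraint more concrete, here is a minimal NumPy sketch. The abstract does not give the loss used by MM-Diff, so the formulation below is an assumption for illustration only: it penalizes the fraction of each subject's attention mass that falls outside that subject's region mask. The function name `attention_constraint_loss`, its arguments, and the use of binary region masks are all hypothetical.

```python
import numpy as np

def attention_constraint_loss(attn_maps, masks, eps=1e-8):
    """Hypothetical constraint: keep each subject's attention inside its own region.

    attn_maps: (n_subjects, h, w) non-negative attention maps, one per subject.
    masks:     (n_subjects, h, w) binary region masks, one per subject.
    Returns the mean fraction of attention mass lying outside the masks.
    """
    attn = np.asarray(attn_maps, dtype=float)
    masks = np.asarray(masks, dtype=float)
    inside = (attn * masks).sum(axis=(1, 2))   # attention mass inside each mask
    total = attn.sum(axis=(1, 2)) + eps        # total attention mass per subject
    return float(np.mean(1.0 - inside / total))

# Toy example: two subjects occupying the left and right halves of a 4x4 map.
left = np.zeros((4, 4))
left[:, :2] = 1.0
right = 1.0 - left
masks = np.stack([left, right])

aligned_loss = attention_constraint_loss(masks, masks)        # attention matches the masks
swapped_loss = attention_constraint_loss(masks[::-1], masks)  # attention lands on the other subject
```

In this toy setup the loss is close to zero when each subject attends only to its own region and close to one when the two subjects' attention maps are swapped, which is the kind of subject confusion such a constraint is meant to discourage.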

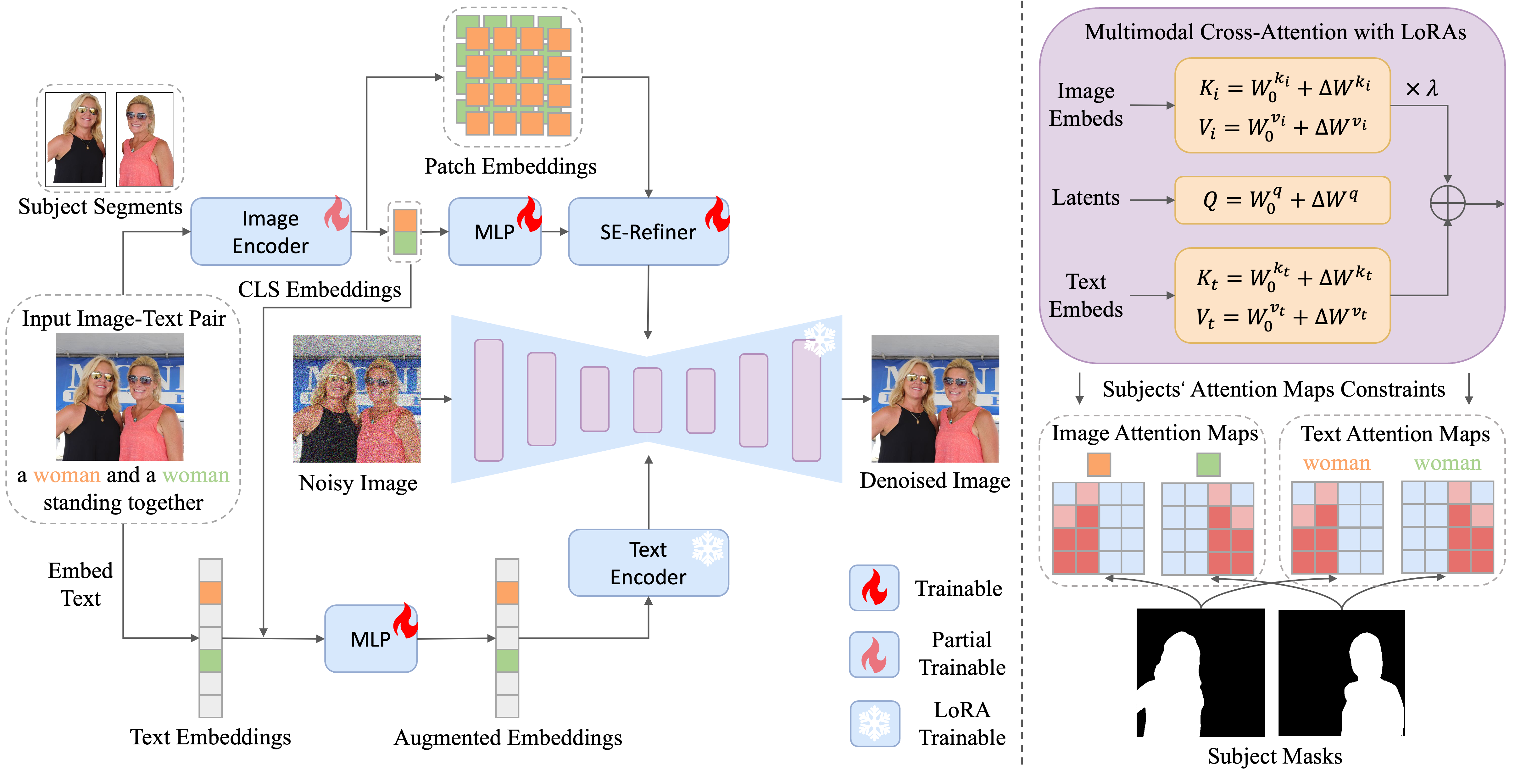

The overall pipeline of the proposed MM-Diff. On the left, the vision-augmented text embeddings and a small set of detail-rich subject embeddings are injected into the diffusion model through the multimodal cross-attention. On the right, we illustrate how the cross-attention is implemented with LoRAs, as well as the attention constraints that facilitate multi-subject generation.
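The following NumPy sketch shows one plausible reading of a multimodal cross-attention layer with LoRAs. It is not the authors' implementation: the caption does not specify how the two branches are combined, so the sketch assumes that the text branch uses the frozen key and value projections, that the subject branch reuses those projections with a low-rank (LoRA) update, and that the two outputs are summed. All names (`multimodal_cross_attention`, `lora`, `subj_weight`) and all shapes are hypothetical.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def attention(q, k, v):
    # Scaled dot-product attention: q is (n_q, d), k and v are (n_kv, d).
    scores = q @ k.T / np.sqrt(q.shape[-1])
    return softmax(scores, axis=-1) @ v

def lora(w, a, b, scale=1.0):
    # Low-rank update of a frozen weight: w + scale * (a @ b).
    return w + scale * (a @ b)

def multimodal_cross_attention(latents, text_emb, subj_emb,
                               w_q, w_k, w_v, lora_k, lora_v, subj_weight=1.0):
    """Assumed form: text branch with frozen weights plus a LoRA-adapted subject branch."""
    q = latents @ w_q
    text_out = attention(q, text_emb @ w_k, text_emb @ w_v)
    subj_out = attention(q, subj_emb @ lora(w_k, *lora_k), subj_emb @ lora(w_v, *lora_v))
    return text_out + subj_weight * subj_out

# Toy shapes: 16 latent tokens, 6 text tokens, 4 subject tokens, LoRA rank 2.
rng = np.random.default_rng(0)
d_model, d_ctx, d_head, rank = 12, 10, 8, 2
latents = rng.normal(size=(16, d_model))
text_emb = rng.normal(size=(6, d_ctx))
subj_emb = rng.normal(size=(4, d_ctx))
w_q = rng.normal(size=(d_model, d_head))
w_k = rng.normal(size=(d_ctx, d_head))
w_v = rng.normal(size=(d_ctx, d_head))
lora_k = (rng.normal(size=(d_ctx, rank)), np.zeros((rank, d_head)))  # zero-initialized up-projection
lora_v = (rng.normal(size=(d_ctx, rank)), np.zeros((rank, d_head)))

out = multimodal_cross_attention(latents, text_emb, subj_emb,
                                 w_q, w_k, w_v, lora_k, lora_v)
```

Under these assumptions, only the low-rank matrices need to be trained for the subject branch, and setting `subj_weight` to zero recovers plain text-conditioned cross-attention.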

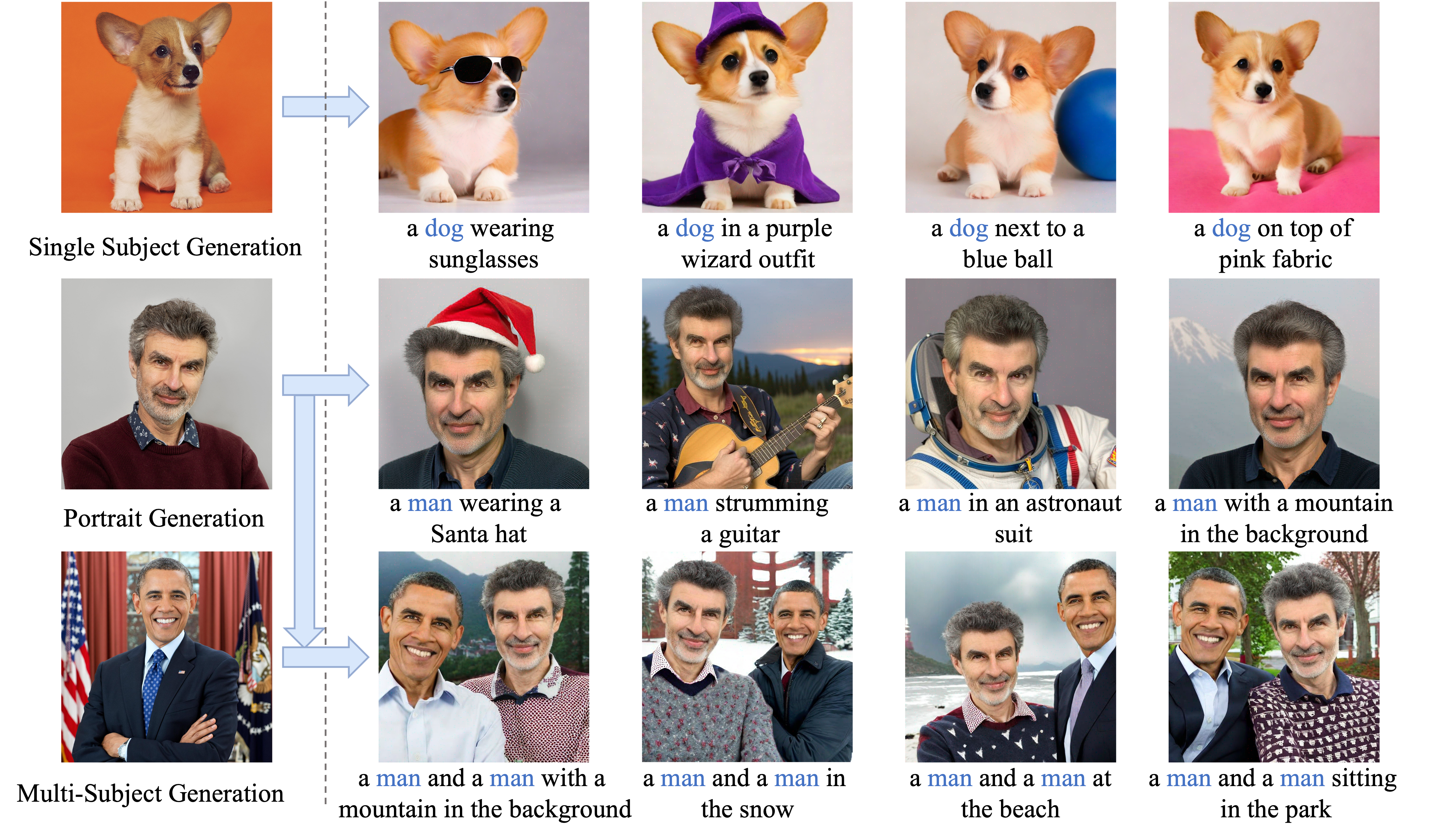

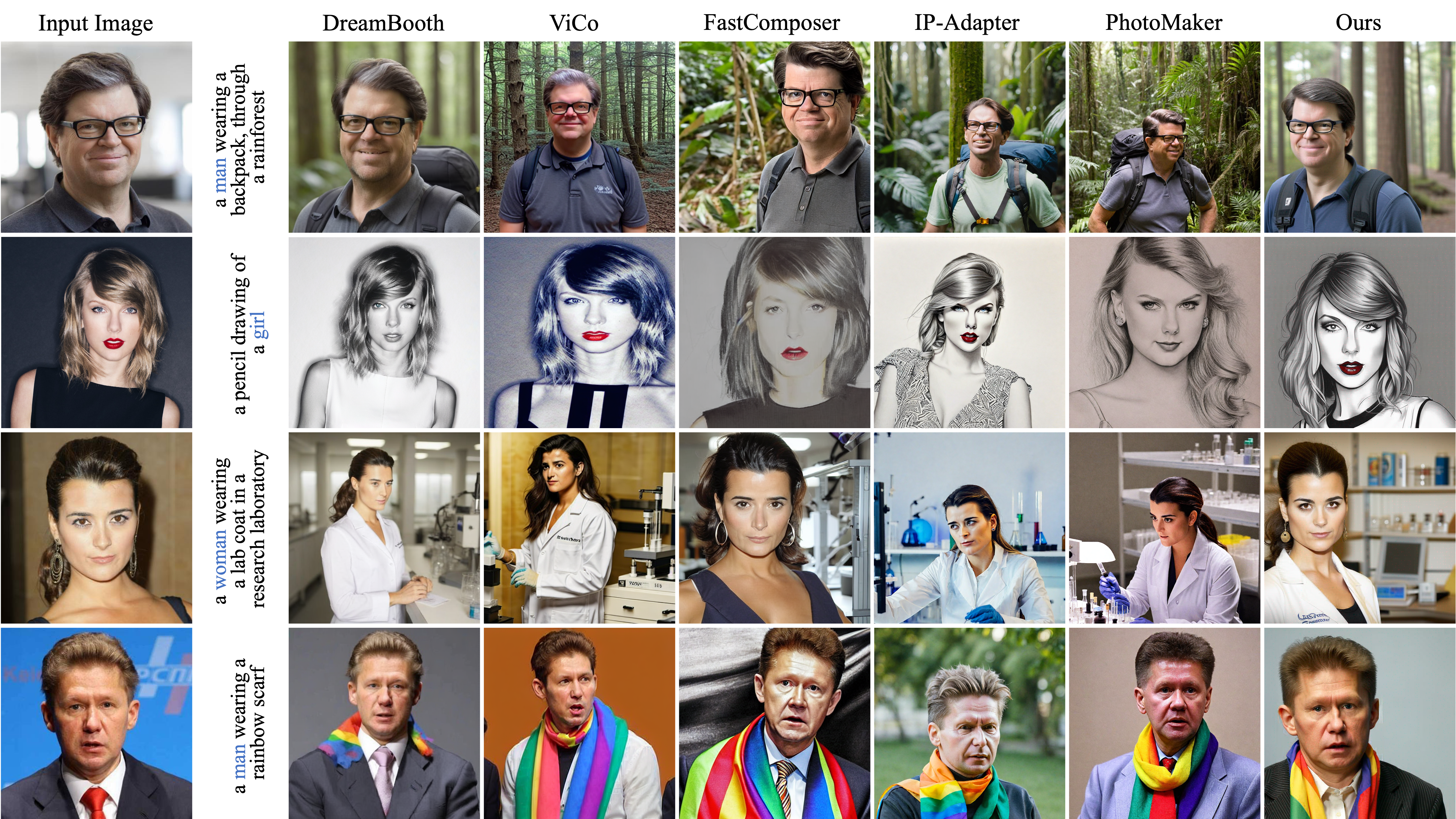

Visual comparisons on single-subject generalization.

Visual comparisons on portrait generation.

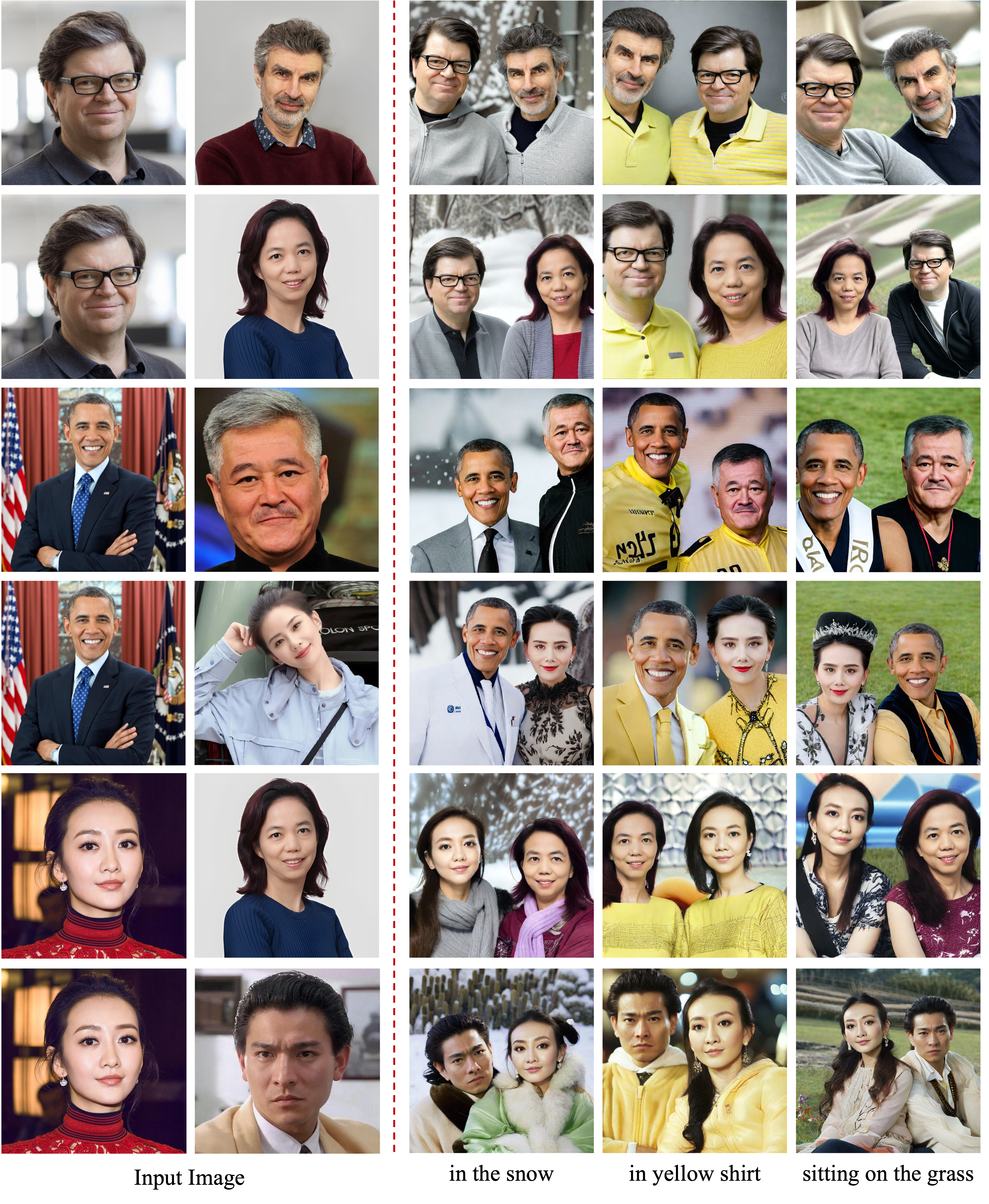

Visual comparisons on multi-subject generation.

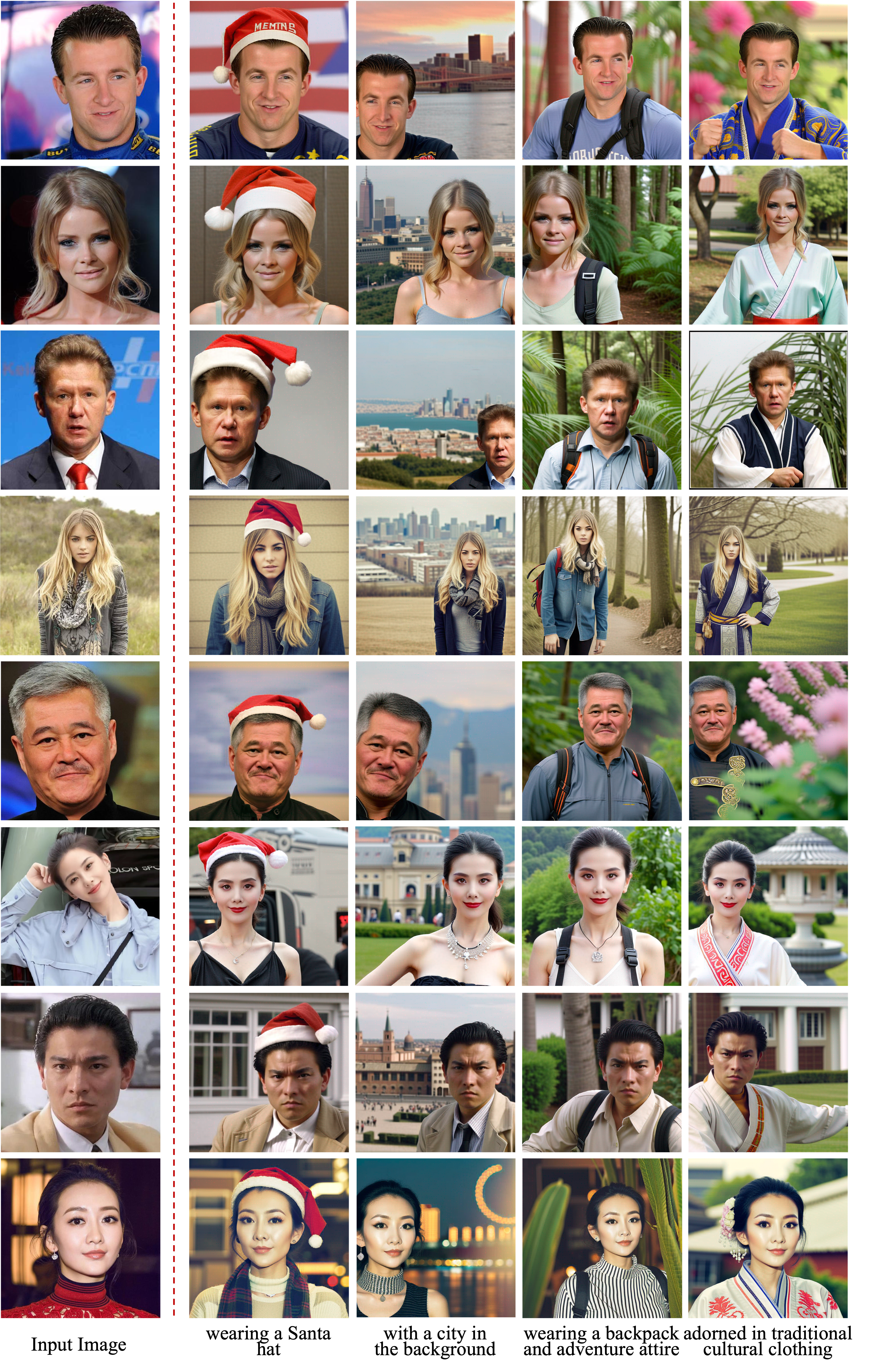

Additional visualization results of portrait generation.

Additional visualization results of multi-subject generation.